1. A Shift in AI Focus: Efficiency Over Volume

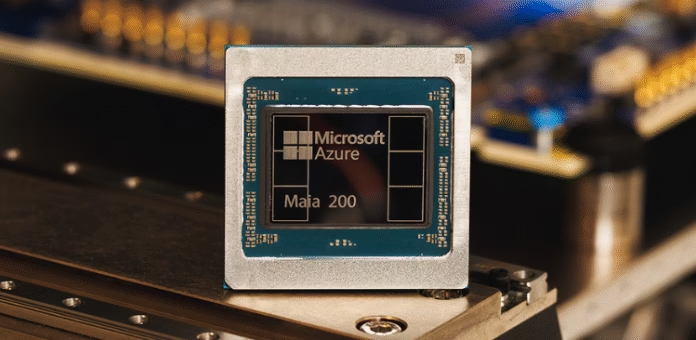

Microsoft has introduced Maia 200, its second-generation custom AI inference chip, signaling a strategic shift in how AI performance is measured. Rather than focusing only on how many tokens an AI model can generate, Microsoft is emphasizing how efficiently those tokens are produced.

Positioned as Microsoft’s most powerful custom accelerator to date, Maia 200 is purpose-built for AI inference—particularly for large reasoning models that underpin modern, agent-driven AI systems.
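Microsoft has not published a formula behind this framing, but common serving metrics can make the difference between volume and efficiency concrete. The sketch is a minimal illustration. Tokens per second measures volume, while tokens per joule and cost per million tokens measure efficiency. The `InferenceRun` type and every number in it are hypothetical, not Maia 200 measurements.

```python
from dataclasses import dataclass


@dataclass
class InferenceRun:
    """One serving benchmark run; every field here is a hypothetical input."""
    tokens_generated: int
    wall_seconds: float
    avg_power_watts: float
    hourly_cost_usd: float


def tokens_per_second(run: InferenceRun) -> float:
    """Volume: raw generation rate."""
    return run.tokens_generated / run.wall_seconds


def tokens_per_joule(run: InferenceRun) -> float:
    """Energy efficiency: tokens per joule (watts x seconds = joules)."""
    energy_joules = run.avg_power_watts * run.wall_seconds
    return run.tokens_generated / energy_joules


def cost_per_million_tokens(run: InferenceRun) -> float:
    """Cost efficiency: hardware rental cost spread over the tokens produced."""
    cost_usd = run.hourly_cost_usd * run.wall_seconds / 3600.0
    return cost_usd / run.tokens_generated * 1_000_000


if __name__ == "__main__":
    # Two made-up accelerators over one hour: B is slower but cheaper to run.
    runs = {
        "A": InferenceRun(3_600_000, 3600.0, 1000.0, 10.0),
        "B": InferenceRun(2_880_000, 3600.0, 600.0, 6.0),
    }
    for name, run in runs.items():
        print(
            f"{name}: {tokens_per_second(run):.0f} tok/s, "
            f"{tokens_per_joule(run):.3f} tok/J, "
            f"${cost_per_million_tokens(run):.3f} per 1M tokens"
        )
```

With these invented numbers, A generates more tokens per second. B, however, generates more tokens per joule and per dollar. That is the distinction between volume and efficiency the article describes.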

2. Built for the Inference Era

Maia 200 is designed to operate across heterogeneous AI environments, supporting multiple models and workloads simultaneously. Microsoft describes it as the most efficient inference system it has ever deployed and claims it outperforms any other first-party cloud accelerator currently available.

Unlike other hyperscalers that built chips primarily around their own tightly coupled stacks for both training and inference, Microsoft is treating inference as the long-term “landing zone” for AI. The chip reflects a belief that agentic, real-world AI applications will rely more on scalable, cost-efficient inference than on constant retraining.

3. Performance Claims and Competitive Positioning

Microsoft says Maia 200 delivers significant gains over rival accelerators from Amazon and Google. According to the company, the chip provides:

Substantially higher performance for low-precision workloads critical to inference

Higher throughput for both 4-bit (FP4) and 8-bit (FP8) floating-point operations

Larger and faster high-bandwidth memory, enabling models to stay closer to compute

In practical terms, Microsoft claims Maia 200 can comfortably handle today’s largest AI models, while leaving room for even more demanding systems in the future—all at a lower cost per unit of performance.
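Low-precision formats affect memory as well as compute. Halving the bits per weight halves the memory needed to hold a model, which makes it easier to fit a large model in a chip's HBM. The sketch below shows that arithmetic under simple assumptions. It counts weights only and approximates the room needed for the KV cache and activations with a `weight_fraction` parameter. The function names, the model size, and the memory capacity are hypothetical, not Maia 200 specifications.

```python
def weight_footprint_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def fits_on_chip(num_params: float, bits_per_param: int,
                 hbm_gb: float, weight_fraction: float = 0.8) -> bool:
    """True if the weights fit in a fraction of one chip's HBM.

    The remaining (1 - weight_fraction) is set aside for the KV cache and
    activations; 0.8 is an arbitrary illustrative value.
    """
    return weight_footprint_gb(num_params, bits_per_param) <= hbm_gb * weight_fraction


if __name__ == "__main__":
    params = 200e9   # hypothetical 200-billion-parameter dense model
    hbm_gb = 200.0   # hypothetical per-chip HBM capacity, not a Maia 200 figure
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_footprint_gb(params, bits):.0f} GB, "
              f"fits on one chip: {fits_on_chip(params, bits, hbm_gb)}")
```

Under these assumptions, only the 4-bit weights (100 GB) fit within the 160 GB budget. The 8-bit version needs 200 GB. Real FP4 and FP8 schemes usually also store per-block scale factors, which adds a small overhead that this sketch ignores.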

4. Memory-Centric Design for Faster Inference

A defining feature of Maia 200 is its redesigned memory architecture. The chip combines:

Large pools of high-bandwidth memory (HBM)

On-chip static RAM (SRAM)

A specialized direct memory access engine

A custom network-on-chip fabric

Together, these components are engineered to move data rapidly and efficiently, improving token throughput and reducing latency during inference. Microsoft says this architecture allows AI models to operate closer to peak performance under real-world workloads.
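Microsoft has not detailed how Maia 200 schedules data movement. A standard roofline estimate still shows why memory bandwidth weighs so heavily on token throughput. In autoregressive decoding, each step streams the model's weights from memory and performs roughly two floating-point operations per weight per token. As a result, small batches are limited by bandwidth and large batches by compute. This is a minimal sketch that assumes a dense model and ignores KV-cache traffic, SRAM reuse, and inter-chip communication. The function names and hardware numbers are hypothetical placeholders.

```python
def decode_tokens_per_second(params: float, bytes_per_param: float, batch: int,
                             mem_bw_bytes_per_s: float, peak_flops: float) -> float:
    """Roofline estimate of decode throughput for a dense transformer.

    Simplifications: each decode step streams every weight from memory once
    (shared by all sequences in the batch) and performs ~2 FLOPs per weight
    per token. KV-cache reads, on-chip SRAM reuse, and inter-chip traffic
    are ignored.
    """
    memory_time = params * bytes_per_param / mem_bw_bytes_per_s
    compute_time = 2 * params * batch / peak_flops
    # Whichever resource is slower sets the step time.
    step_time = max(memory_time, compute_time)
    return batch / step_time


def crossover_batch(bytes_per_param: float, mem_bw_bytes_per_s: float,
                    peak_flops: float) -> float:
    """Batch size at which compute time catches up with memory time."""
    return peak_flops * bytes_per_param / (2 * mem_bw_bytes_per_s)


if __name__ == "__main__":
    # Hypothetical numbers: 70B-parameter model in 8-bit weights,
    # 5 TB/s of memory bandwidth, 1 PFLOP/s of peak compute.
    P, BYTES, BW, PEAK = 70e9, 1.0, 5e12, 1e15
    print(f"crossover batch ~ {crossover_batch(BYTES, BW, PEAK):.0f}")
    for batch in (1, 16, 64, 256):
        tps = decode_tokens_per_second(P, BYTES, batch, BW, PEAK)
        print(f"batch {batch:>3}: ~{tps:,.0f} tokens/s")
```

Below the crossover batch size, throughput in this model grows linearly with batch size because all sequences share one weight read. Above it, the chip is compute-bound. Faster memory raises throughput only in the bandwidth-bound regime, which is where a memory-centric design like the one described above is aimed.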

5. Optimized for Multimodal and Agentic AI

Maia 200 was designed with modern AI use cases in mind. Enterprises increasingly expect AI systems to reason across text, images, audio, and video—often in multi-step workflows that resemble autonomous agents.

The chip supports this shift by serving multiple models within Microsoft’s AI ecosystem, including the latest GPT-5.2 family. It integrates directly with Azure and underpins services such as Microsoft Foundry and Microsoft 365 Copilot. Microsoft’s internal superintelligence group also plans to use Maia 200 for reinforcement learning and synthetic data generation.

6. Manufacturing and Technical Advantages

From a hardware standpoint, Maia 200 is produced on a 3-nanometer manufacturing process, a leading-edge node. Analysts note that this contributes to its advantages in compute density, memory bandwidth, and interconnect performance.

However, experts caution that enterprises should validate real-world performance within Azure before shifting workloads away from established platforms such as Nvidia’s GPUs. They also stress the importance of confirming that Microsoft’s internal cost savings translate into tangible pricing benefits for customers.

7. Learning From Past Challenges

Microsoft’s earlier Maia efforts faced development and design setbacks that slowed its progress in custom silicon while competitors accelerated. Analysts say Maia 200 reflects a more mature approach, combining lessons learned with access to OpenAI’s intellectual property and closer alignment between hardware and software.

This evolution could significantly reduce Microsoft’s long-term infrastructure costs, especially as inference workloads scale across its cloud.

8. A Complement, Not a Replacement

Microsoft is careful to position Maia 200 as a complement to, rather than a competitor of, GPUs from Nvidia and AMD. The goal is flexibility: offering customers optimized inference options alongside traditional accelerators.

The company’s open software stack is designed to make deploying inference workloads on Maia straightforward, with minimal friction for developers already building on Azure.

9. Availability and Developer Access

Maia 200 is currently live in Microsoft’s US Central data center region, with additional deployments planned in other locations. Developers can access a preview software development kit that includes tools such as PyTorch integration, a Triton compiler, optimized kernels, and low-level programming support tailored to Maia’s architecture.

The Bigger Picture

With Maia 200, Microsoft is making a clear bet that the future of AI lies in scalable, cost-efficient inference, not just in raw model size or training power. By embedding this capability directly into Azure’s infrastructure, Microsoft aims to make advanced AI feel native, accessible, and operational at enterprise scale.